This post explains how to set up and integrate GitLab CI and GCP Cloud Run to build an efficient CI/CD pipeline.

I wanted to deploy a simple API-server for the previously mentioned React App. The API-server is a NodeJS application, which exposes a REST API without any authentication and authorization requirements for now. I selected GCP Cloud Run to host the service.

I chose GCP Cloud Run for its simplicity and its serverless characteristics. Serverless is a good fit for this application, as it is at a comparatively early stage and will not be used much. An offering where the application runs 24/7 would be overkill for this use case. Cloud Run is simple in that it lets me deploy Containers without taking care of the complexities of a Kubernetes Cluster.

This blog post will explain how to set up GitLab CI to create a CI/CD pipeline for this API-server, which continuously deploys to staging and production environments.

Prerequisites on GCP

I created a Service Account, which I use to deploy automatically.

The Service Account is assigned a custom role with the following permissions:

- iam.serviceAccounts.actAs (necessary to deploy to Cloud Run)
- run.services.create (necessary to deploy to Cloud Run)
- run.services.get (necessary to deploy to Cloud Run)
- run.services.list (necessary to get the URI of the Service)
- run.services.update (necessary to deploy to Cloud Run)
- storage.buckets.get (necessary to push the Docker image to GCP)
- storage.multipartUploads.abort (necessary to push the Docker image to GCP)
- storage.multipartUploads.create (necessary to push the Docker image to GCP)
- storage.multipartUploads.list (necessary to push the Docker image to GCP)
- storage.multipartUploads.listParts (necessary to push the Docker image to GCP)
- storage.objects.create (necessary to push the Docker image to GCP)
- storage.objects.delete (necessary to push the Docker image to GCP)
- storage.objects.list (necessary to push the Docker image to GCP)
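One way to keep this permission set reproducible is to store it in a role definition file and create the role from that file with `gcloud iam roles create`. The following is a minimal sketch, not necessarily how the role above was created. The file name, title, description and role ID are assumptions chosen for illustration.

```yaml
# role.yaml (hypothetical file name): custom role for the deploy Service Account
title: GitLab CI Cloud Run Deployer  # assumed title
description: Push images to the GCP Container Registry and deploy them to Cloud Run  # assumed text
stage: GA
includedPermissions:
  # Deploying to Cloud Run
  - iam.serviceAccounts.actAs
  - run.services.create
  - run.services.get
  - run.services.update
  # Reading the URI of the service
  - run.services.list
  # Pushing the Docker image to GCP
  - storage.buckets.get
  - storage.multipartUploads.abort
  - storage.multipartUploads.create
  - storage.multipartUploads.list
  - storage.multipartUploads.listParts
  - storage.objects.create
  - storage.objects.delete
  - storage.objects.list
```

Assuming the file is saved as role.yaml, running `gcloud iam roles create gitlabCiDeployer --project my-staging-project --file role.yaml` should create the role, where `gitlabCiDeployer` is a made-up role ID.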

You will need to store the access credentials for this Service Account in GitLab; see the section on the deploy stage below for details.

GitLab CI/CD pipeline

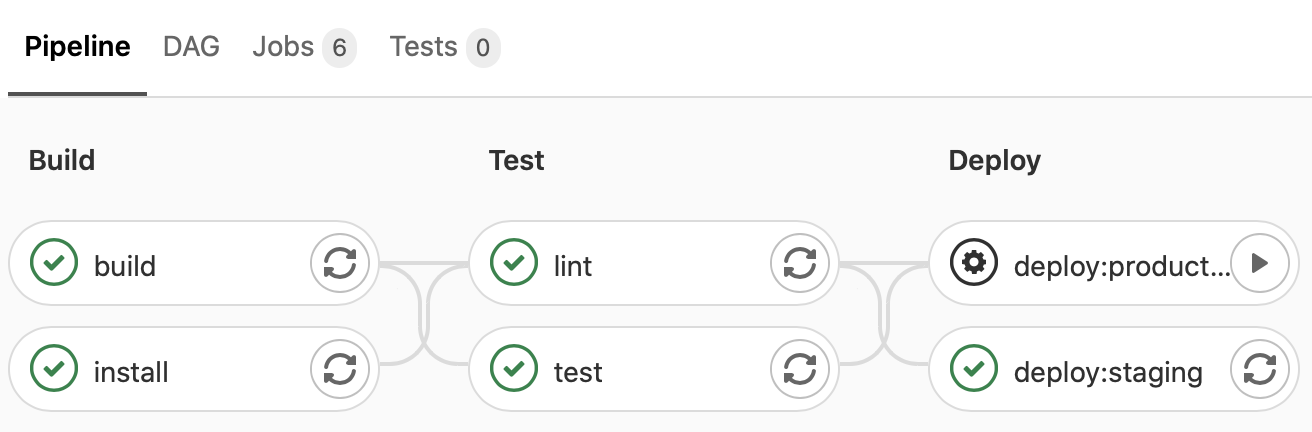

The CI/CD pipeline consists of three stages: build, test and finally deploy.

The build stage

In the build stage, we build the Container using kaniko.

Kaniko is a tool that builds a Container without requiring privileged mode.

What I also like about kaniko is how it does caching to speed up building.

I use the Container registry of GitLab, as it is easy to work with.

The build stage also has an install job.

This job is responsible for installing the NodeJS packages and caching the node_modules folder.

    install:
      stage: build
      image: node:14.9-alpine3.12
      script:
        - npm install
      cache:
        key:
          files:
            - package-lock.json
        paths:
          - node_modules/

    build:
      image:
        name: gcr.io/kaniko-project/executor:debug
        entrypoint: [""]
      variables:
        DOCKER_IMAGE: $CI_REGISTRY_IMAGE/$CI_COMMIT_REF_SLUG:$CI_COMMIT_SHA
      script:
        - echo "{\"auths\":{\"$CI_REGISTRY\":{\"username\":\"$CI_REGISTRY_USER\",\"password\":\"$CI_REGISTRY_PASSWORD\"}}}" > /kaniko/.docker/config.json
        - /kaniko/executor
          --context $CI_PROJECT_DIR
          --dockerfile $CI_PROJECT_DIR/Dockerfile
          --cache=true
          --cache-repo=$CI_REGISTRY_IMAGE/cache
          --destination $DOCKER_IMAGE
      stage: build

The test stage

The test stage consists of basic test and lint jobs.

Both reuse the node_modules folder cached by the install job.

    lint:
      stage: test
      image: node:14.9-alpine3.12
      before_script:
        - npm install
      needs: ["install"]
      cache:
        key:
          files:
            - package-lock.json
        policy: pull
        paths:
          - node_modules/
      script:
        - npm run lint

    test:
      stage: test
      image: node:14.9-alpine3.12
      before_script:
        - npm install
      needs: ["install"]
      cache:
        key:
          files:
            - package-lock.json
        policy: pull
        paths:
          - node_modules/
      script:
        - npm run test
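The lint and test jobs differ only in their script. As a sketch of how the duplication could be reduced, the shared settings can move into a hidden job that both jobs pull in through `extends`, the same keyword the production deploy job uses further down. The name `.node-job` is hypothetical.

```yaml
# Hidden job (leading dot): never runs itself, only serves as a template
.node-job:
  stage: test
  image: node:14.9-alpine3.12
  needs: ["install"]
  before_script:
    - npm install
  cache:
    key:
      files:
        - package-lock.json
    policy: pull   # read the cache written by the install job, never upload it
    paths:
      - node_modules/

lint:
  extends: .node-job
  script:
    - npm run lint

test:
  extends: .node-job
  script:
    - npm run test
```

The resulting jobs should behave like the two spelled-out versions above; the trade-off is that a reader has to look up the template to see the full job.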

The deploy stage

For Cloud Run, we need to push the Container to the GCP Container Registry before we can deploy it.

In the deploy job, we first need to authenticate against both Container registries: the GitLab Container Registry and the GCP Container Registry.

$GOOGLE_APPLICATION_CREDENTIALS must be defined in the GitLab CI/CD Variables config with the type File, so that it resolves to the path of a file containing the Service Account key.

    docker login -u $CI_REGISTRY_USER -p $CI_REGISTRY_PASSWORD $CI_REGISTRY
    gcloud auth activate-service-account --key-file $GOOGLE_APPLICATION_CREDENTIALS
    gcloud config set project $GCP_PROJECT
    gcloud auth configure-docker --quiet

Then we pull the image from the GitLab Container Registry and push it over to GCP.

    docker image pull $DOCKER_IMAGE
    export GCP_PROJECT_ID="$(gcloud config get-value project)"
    export GCP_DOCKER_IMAGE="gcr.io/$GCP_PROJECT_ID/$CI_COMMIT_REF_SLUG"
    docker image tag $DOCKER_IMAGE $GCP_DOCKER_IMAGE
    docker image push $GCP_DOCKER_IMAGE

And after that, we can deploy it to Cloud Run.

    gcloud run deploy $GCP_SERVICE --image $GCP_DOCKER_IMAGE --platform managed --region $GCP_REGION

I use GitLab to manage environments and deployments. For this we need to derive the environment URL.

    echo "ENVIRONMENT_URL=$(gcloud run services list --platform managed --region $GCP_REGION | grep $GCP_SERVICE | awk '{print $4}')" >> deploy.env
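The grep and awk pipeline above depends on the column layout of the human-readable table that `gcloud run services list` prints. A possibly more robust sketch asks for the URL of the one service directly, using `gcloud run services describe` with a `--format` projection. The fragment shows only the affected script lines of the deploy job and assumes the same variables as above; the shell variable `URL` is introduced for illustration.

```yaml
# Fragment of the deploy job: only the URL lookup differs from the version above
script:
  # status.url is the address Cloud Run assigned to the service
  - URL="$(gcloud run services describe "$GCP_SERVICE" --platform managed --region "$GCP_REGION" --format 'value(status.url)')"
  # Hand the URL to GitLab through the dotenv report
  - echo "ENVIRONMENT_URL=$URL" >> deploy.env
```

Because the lookup names the service explicitly, it should not pick up other services whose names merely contain `$GCP_SERVICE`, which the grep variant could.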

Put together, the two deploy jobs look like this:

    deploy:staging:
      image: google/cloud-sdk:alpine
      stage: deploy
      services:
        - docker:19.03-dind
      before_script:
        - docker login -u $CI_REGISTRY_USER -p $CI_REGISTRY_PASSWORD $CI_REGISTRY
        - gcloud auth activate-service-account --key-file $GOOGLE_APPLICATION_CREDENTIALS
        - gcloud config set project $GCP_PROJECT
        - gcloud auth configure-docker --quiet
      script:
        - docker image pull $DOCKER_IMAGE
        - export GCP_PROJECT_ID="$(gcloud config get-value project)"
        - export GCP_DOCKER_IMAGE="gcr.io/$GCP_PROJECT_ID/$CI_COMMIT_REF_SLUG"
        - docker image tag $DOCKER_IMAGE $GCP_DOCKER_IMAGE
        - docker image push $GCP_DOCKER_IMAGE
        - gcloud run deploy $GCP_SERVICE --image $GCP_DOCKER_IMAGE --platform managed --region $GCP_REGION
        - echo "ENVIRONMENT_URL=$(gcloud run services list --platform managed --region $GCP_REGION | grep $GCP_SERVICE | awk '{print $4}')" >> deploy.env
      environment:
        name: staging
        url: $ENVIRONMENT_URL
      artifacts:
        reports:
          dotenv: deploy.env
      dependencies:
        - build
      variables:
        GIT_STRATEGY: none
        DOCKER_IMAGE: $CI_REGISTRY_IMAGE/$CI_COMMIT_REF_SLUG:$CI_COMMIT_SHA
        DOCKER_HOST: tcp://docker:2375
        GCP_PROJECT: my-staging-project
        GCP_REGION: europe-west1
        GCP_SERVICE: api-server
      only:
        - master

    deploy:production:
      extends: deploy:staging
      environment:
        name: production
      variables:
        GCP_PROJECT: my-production-project
      when: manual

Summary & outlook

Deploying to GCP Cloud Run is pretty straightforward.

What I find quite tedious is that the GCP CLI expects the region of a service to be passed on every command. It would be great if the GCP CLI derived the right region automatically.
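The repetition can at least be reduced: gcloud has `run/region` and `run/platform` configuration properties that serve as defaults for the `gcloud run` commands. A sketch of how the deploy job might use them, assuming the same `$GCP_REGION`, `$GCP_SERVICE` and `$GCP_DOCKER_IMAGE` variables as above. The fragment omits the remaining lines of the job.

```yaml
# Fragment of the deploy job: set the Cloud Run defaults once, then drop the flags
before_script:
  - gcloud config set run/platform managed
  - gcloud config set run/region $GCP_REGION
script:
  # --platform and --region are now taken from the gcloud configuration
  - gcloud run deploy $GCP_SERVICE --image $GCP_DOCKER_IMAGE
```

The region still has to be stated once per job, so this shortens the commands rather than deriving the region automatically.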

I also did not find any way to pull an image and to push it to another Container Registry without privileged mode and Docker-in-Docker.
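One option that may be worth trying is crane from the go-containerregistry project, which copies images between registries through the registry APIs and therefore needs no Docker daemon. The following is a sketch rather than a verified setup. It assumes the `gcr.io/go-containerregistry/crane:debug` image, a hypothetical job name `copy:image`, and the same CI/CD variables as the deploy job.

```yaml
# Hypothetical job: copy the image from GitLab to GCP without Docker-in-Docker
copy:image:
  stage: deploy
  image:
    name: gcr.io/go-containerregistry/crane:debug
    entrypoint: [""]
  variables:
    GIT_STRATEGY: none
    DOCKER_IMAGE: $CI_REGISTRY_IMAGE/$CI_COMMIT_REF_SLUG:$CI_COMMIT_SHA
    GCP_PROJECT: my-staging-project
  script:
    # Log in to the GitLab Container Registry
    - crane auth login -u "$CI_REGISTRY_USER" -p "$CI_REGISTRY_PASSWORD" "$CI_REGISTRY"
    # Log in to gcr.io with the Service Account key file; _json_key is the user name for key-based logins
    - crane auth login -u _json_key -p "$(cat "$GOOGLE_APPLICATION_CREDENTIALS")" gcr.io
    # Copy registry to registry, without pulling into a local Docker daemon
    - crane copy "$DOCKER_IMAGE" "gcr.io/$GCP_PROJECT/$CI_COMMIT_REF_SLUG"
```

The `gcloud run deploy` step would then live in a separate job based on the google/cloud-sdk image, which would no longer need the dind service.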

Looking forward: to enable authentication and authorization, I’m considering trying GCP API Gateway.

Complete .gitlab-ci.yml for reference

    stages:
      - build
      - test
      - deploy

    install:
      stage: build
      image: node:14.9-alpine3.12
      script:
        - npm install
      cache:
        key:
          files:
            - package-lock.json
        paths:
          - node_modules/

    build:
      image:
        name: gcr.io/kaniko-project/executor:debug
        entrypoint: [""]
      variables:
        DOCKER_IMAGE: $CI_REGISTRY_IMAGE/$CI_COMMIT_REF_SLUG:$CI_COMMIT_SHA
      script:
        - echo "{\"auths\":{\"$CI_REGISTRY\":{\"username\":\"$CI_REGISTRY_USER\",\"password\":\"$CI_REGISTRY_PASSWORD\"}}}" > /kaniko/.docker/config.json
        - /kaniko/executor
          --context $CI_PROJECT_DIR
          --dockerfile $CI_PROJECT_DIR/Dockerfile
          --cache=true
          --cache-repo=$CI_REGISTRY_IMAGE/cache
          --destination $DOCKER_IMAGE
      stage: build

    lint:
      stage: test
      image: node:14.9-alpine3.12
      before_script:
        - npm install
      needs: ["install"]
      cache:
        key:
          files:
            - package-lock.json
        policy: pull
        paths:
          - node_modules/
      script:
        - npm run lint

    test:
      stage: test
      image: node:14.9-alpine3.12
      before_script:
        - npm install
      needs: ["install"]
      cache:
        key:
          files:
            - package-lock.json
        policy: pull
        paths:
          - node_modules/
      script:
        - npm run test

    deploy:staging:
      image: google/cloud-sdk:alpine
      stage: deploy
      services:
        - docker:19.03-dind
      before_script:
        - docker login -u $CI_REGISTRY_USER -p $CI_REGISTRY_PASSWORD $CI_REGISTRY
        - gcloud auth activate-service-account --key-file $GOOGLE_APPLICATION_CREDENTIALS
        - gcloud config set project $GCP_PROJECT
        - gcloud auth configure-docker --quiet
      script:
        - docker image pull $DOCKER_IMAGE
        - export GCP_PROJECT_ID="$(gcloud config get-value project)"
        - export GCP_DOCKER_IMAGE="gcr.io/$GCP_PROJECT_ID/$CI_COMMIT_REF_SLUG"
        - docker image tag $DOCKER_IMAGE $GCP_DOCKER_IMAGE
        - docker image push $GCP_DOCKER_IMAGE
        - gcloud run deploy $GCP_SERVICE --image $GCP_DOCKER_IMAGE --platform managed --region $GCP_REGION
        - echo "ENVIRONMENT_URL=$(gcloud run services list --platform managed --region $GCP_REGION | grep $GCP_SERVICE | awk '{print $4}')" >> deploy.env
      environment:
        name: staging
        url: $ENVIRONMENT_URL
      artifacts:
        reports:
          dotenv: deploy.env
      dependencies:
        - build
      variables:
        GIT_STRATEGY: none
        DOCKER_IMAGE: $CI_REGISTRY_IMAGE/$CI_COMMIT_REF_SLUG:$CI_COMMIT_SHA
        DOCKER_HOST: tcp://docker:2375
        GCP_PROJECT: my-staging-project
        GCP_REGION: europe-west1
        GCP_SERVICE: api-server
      only:
        - master

    deploy:production:
      extends: deploy:staging
      environment:
        name: production
      variables:
        GCP_PROJECT: my-production-project
      when: manual